Operating Systems and UNIX

1. Operating Systems and UNIX

We call the collection of software that we use to make a “computer” “useful” an operating system. But what exactly does this mean?

1.1. Computer

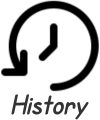

Today a computer can take many forms: desktop Personal Computers (PCs), laptops, mobile phones, tablets, building-scale supercomputers, etc. In some sense the defining property we care about is that the device supports a classic model for programming it, which we call the von Neumann architecture. We will discuss this model in much more detail when we start considering exactly how software and hardware work with respect to assembly language. The figure above illustrates how we will visually represent a generic von Neumann based computer, and the list below summarizes what we need to know for now.

- Central Processing Unit (CPU): the smart bits that “execute” the instructions of software, also called the processor.
- Memory:
  - the devices that hold the instructions and data that make up the running software
  - physically connected to the CPU via direct wiring; often referred to as RAM, main memory, etc.
  - fast, power hungry and volatile (values are lost when electricity is lost)
- I/O devices (for the moment we only consider two categories):
  - Storage devices:
    - hard drives, SSDs, flash memory, flash drives/USB sticks, etc.
    - slow and large compared to main memory
    - require much more complicated programming to access their data compared to Memory
    - non-volatile (keep their values even when they don’t have electricity)
  - Communication devices:
    - allow connections to the outside world
    - networks, terminals, USB devices (keyboards, mice, etc.)

For the moment it suffices to say that programming a computer means being able to apply your knowledge of a programming language and existing software to have the computer do something you want it to. For example, edit photos, play music, maintain a list of grocery items, calculate PI, send email, browse the web, test if a molecule might have promise as a new vaccine, automatically adjust the temperature of your home, control a car, etc.

1.1.1. Programmer vs User

To be honest, many people simply care that the computer can run existing programs; we will call these folks “Users”. However, “Programmers” are a special type of user. Programmers use operating systems and other software tools to create new programs themselves. As a matter of fact, some programmers focus simply on developing programs that make writing programs easier, like the folks who develop operating system software, programming languages, editors, etc.

1.2. Useful

So from our perspective, the main aspect of the operating system that we care about is that it makes it easy for programmers to create new programs. In addition, we would like an operating system that does not hide things from us, so that we can explore how programs (and computers) work.

Operating systems are designed and created with specific uses and hardware in mind. Some are designed for special purpose computers and the programs that are expected to run on them, like computers built into an airplane to control its various parts or a supercomputer that is expected to run large and complex scientific simulation programs. Other operating systems are designed primarily to enable users to install and run commercial grade programs on their personal devices. While these operating systems do enable programmers to write new programs, they are designed to hide most of these aspects from their “normal” target users, who mainly just want to use programs written by others. The interfaces of these operating systems tend to focus on graphics and visually oriented ways of interacting with programs.

1.3. UNIX

UNIX, on the other hand, assumes that its users are primarily programmers. Its design and collection of tools are not meant to be particularly user friendly. Rather, UNIX is designed to allow programmers to be very productive and to support a broad range of programming tools. Many of the concepts and mechanisms of UNIX underlie, or are present in, other operating systems but have been hidden or obscured to make things easier for their non-programmer users.

1.3.1. An Operating System Built by Programmers for Programmers

The UNIX operating system was built by master programmers who valued programmability and productivity. In some sense learning to work on UNIX systems is a rite of passage that not only teaches you how to be productive on a computer running the UNIX operating system but also teaches you to think and act like a programmer.

Given its programming focus, UNIX is organized around two categories of software: kernel and user. The kernel is a component of UNIX that users do not directly interact with. It is the core software that manages the various devices of the computer and constructs an environment that allows programmers to create new user programs. The idea is that very little is really built into UNIX; rather, its users are encouraged to write lots of small programs that build on the functionality of the kernel to do useful things. The kernel’s main job is to provide the ability to run new programs, give those programs access to the hardware, and provide common ways for programs to interconnect and work together.

I know this all sounds a bit recursive – welcome to computer science. As we start interacting with UNIX it will make more sense.

Unix recently celebrated its 50th anniversary.

50 Years of Unix

Its people and history make for a fascinating journey into how we have gotten to where we are today.

The Strange birth and long life of Unix

UNIX and its children literally make our digital world go around and will likely continue to do so for quite some time.

1.3.2. The Kernel

The Kernel is the bottom layer of software that has direct access to all the hardware. There is a single instance of the kernel that bootstraps the hardware. It provides a means for starting application programs (often also called user programs). The kernel performs and provides several important abilities.

- The kernel enables several user programs to run at the same time.
- The kernel keeps running user programs isolated from each other, such that each program can behave as if it is the only program running.
- The kernel provides facilities for managing the running programs, e.g. listing, pausing, terminating, etc.

While user programs can be started and can end, the kernel is always present and running. If it ends, it means that your computer has crashed!
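As a small illustration of these management facilities, the following Python sketch starts a user program and then asks the kernel to pause, resume and finally terminate it. It is only a sketch under stated assumptions: Python is our choice of language here, the child program (a Python interpreter that just sleeps) is a stand-in for any long-running program, and the variable names are hypothetical. The signals `SIGSTOP`, `SIGCONT` and `SIGKILL` are standard UNIX signals.

```python
import signal
import subprocess
import sys

# Start a user program. The child is a stand-in for any long-running
# program: a second Python interpreter that would sleep for 30 seconds.
child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(30)"])
print("started process", child.pid)

# Ask the kernel to pause the process, then to let it continue.
child.send_signal(signal.SIGSTOP)
child.send_signal(signal.SIGCONT)

# Ask the kernel to terminate the process, then wait for it to end.
child.send_signal(signal.SIGKILL)
exit_status = child.wait()

# On UNIX, Python reports "ended by signal N" as the negative value -N.
ended_by_kill = (exit_status == -signal.SIGKILL)
print("ended by SIGKILL:", ended_by_kill)
```

Note that the program doing the managing is itself just another user program; all of the actual pausing and terminating is carried out by the kernel on its behalf.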

The kernel provides a collection of ever present functions and objects that programs/programmers can rely on:

- it provides core software “abstractions” packaged in the form of a library of kernel functions
  - these functions make it easier for programs to use the hardware
  - the kernel ensures that these functions behave consistently across different hardware
  - these functions hide the details and complexity of the hardware devices from the programs and thus from the programmers who wrote them. For example, a Unix kernel provides programs with the generic abstraction of a “file” that allows programs to create, store and recall data on storage devices without ever needing to know any details of the devices themselves.
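To give a feel for the “file” abstraction, here is a minimal Python sketch that creates a file, stores some data in it and later recalls that data. Python's `os.open`, `os.write`, `os.read` and `os.close` are thin wrappers around the kernel functions of the same names. The file name, its contents and the use of a temporary directory are assumptions made purely for illustration.

```python
import os
import tempfile

# Pick a scratch location; the directory and file name are arbitrary.
scratch_dir = tempfile.mkdtemp()
path = os.path.join(scratch_dir, "groceries.txt")

# Create the file and store some bytes in it.
fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
os.write(fd, b"milk\neggs\n")
os.close(fd)

# Later, recall the data (read up to 100 bytes).
fd = os.open(path, os.O_RDONLY)
data = os.read(fd, 100)
os.close(fd)
print(data.decode(), end="")

# Clean up the scratch file and directory.
os.remove(path)
os.rmdir(scratch_dir)
```

Nothing in this code says whether the bytes end up on a hard drive, an SSD or a USB stick. The program only names a file, and the kernel takes care of the device.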

A critical point of the Unix design: humans do not and cannot directly interact with the kernel; only user programs can interact with the kernel, by invoking kernel functions. This design encourages us to view the rest of the operating system, and for that matter all other software, as separate from the kernel. As such it encourages a building block approach in which several alternatives for any given OS feature, like human interface programs, can be added, supported and explored while the kernel remains unchanged. The goal is to empower programmers to grow and extend the system in a natural and seamless way.

It is worth noting most modern operating systems have adopted this basic organization where there is a base kernel component and a large collection of pre-packaged user programs.

1.3.3. User Programs

Largely what most people think of when they think of an OS is the large body of pre-packaged user programs that can be installed with the kernel of the OS. Typically there are at least three categories of user programs, including:

Display Servers: These are collections of programs designed to operate specific devices for human interaction such as graphical screens, keyboards, mice, touch pads, touch screens, etc. The first layer of this software (after the kernel) is typically some form of Windowing System. Examples include Microsoft’s Desktop Window Manager, Apple’s Quartz Compositor and the X Window System traditionally used by Unix operating systems. Today we are also seeing the rise of web-browsers as a way to present a graphical interface; this includes Jupyter Lab, which we make use of. However, older systems such as Unix typically retain support for their older ASCII terminal devices in the form of command line shell programs (the Shell).

File Managers: Programs that work with a Display Server and give a human the ability to explore and find information about the other programs and data installed. Examples include Apple’s Finder and Microsoft’s File Explorer.

Other: There is a highly variable collection of software that forms the rest of the user programs. This body depends largely on the OS and its intended target audience. In the case of OSs that are geared to personal computers, this set of software includes media programs, web-browsers, productivity applications and more. While not always standard, the tools for developing new programs also fall in this category. These include program editors, programming languages and debuggers. A hallmark of Unix is the rich body of programming tools associated with it (including the tools to rebuild the kernel itself).

One of the distinguishing features of Unix is its standard user command line programs that are geared to programmers (https://en.wikipedia.org/wiki/List_of_Unix_commands). While they lack graphical interfaces and are ASCII/command line oriented, they form a very rich and powerful set of building blocks that allow a knowledgeable programmer to rapidly create turnkey solutions for text searching and transformation, data wrangling and automation, as well as the development and debugging of large applications.

1.3.4. Visualizing a Unix system

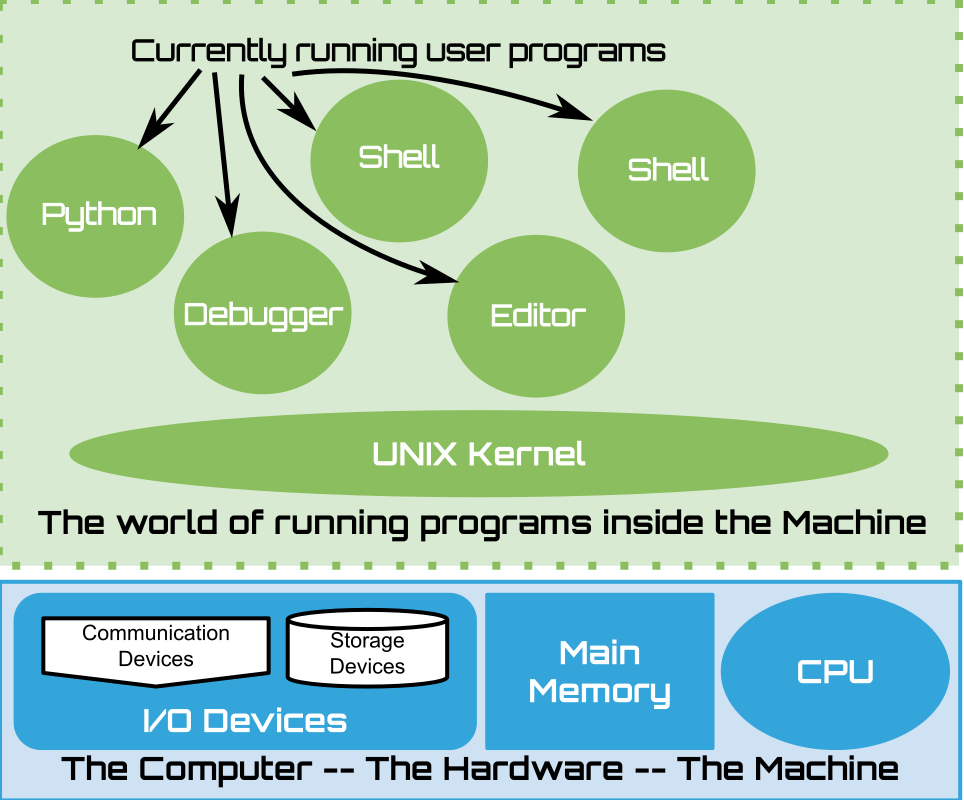

We visualize a running Unix system with the hardware of the computer at the bottom, shaded blue, and the running software, shaded green, above it. The Unix Kernel is a persistent program that forms the foundation for launching and running instances of user programs and is illustrated as the green oval labeled "UNIX Kernel". The smaller green circles represent the currently running programs that have been started. Each time a program is started we add a new circle representing a new running instance of the program. In the diagram each is labeled with the program that was used to start it. Each instance is independent and has its own "life-time". If a specific running instance of a program ends, crashes or is terminated (killed) by the user, we remove its circle. So while in reality we can't physically see the programs running, we nonetheless think of the running software as forming a world of its own, inside the machine, with the kernel enabling its construction and management.

1.3.4.1. Processes and Executables

In UNIX a running program is called a process. A program that a process can be created from is called an executable. In some sense this book is a journey into understanding what exactly these are, and how they relate to each other, the operating system and ultimately the hardware.
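To make the distinction a little more concrete, here is a minimal Python sketch that creates two processes from one and the same executable. The choice of executable (the Python interpreter itself) and the variable names are assumptions made only for illustration. The kernel gives every process its own process ID (PID), so the two running instances can be told apart even though they came from a single file.

```python
import subprocess
import sys

# The executable: a single file on disk (here, the Python interpreter).
executable = sys.executable

# Create two processes from that one executable. Each just sleeps briefly
# so that both are alive at the same time.
processes = [
    subprocess.Popen([executable, "-c", "import time; time.sleep(1)"])
    for _ in range(2)
]

# Each running instance has its own process ID assigned by the kernel.
pids = [p.pid for p in processes]
print("executable:", executable)
print("process IDs:", pids)

# Wait for both processes to end; each has its own independent life-time.
exit_statuses = [p.wait() for p in processes]
```

In the visual language used earlier, this corresponds to drawing two circles that carry the same program label.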

1.3.5. Benefits to studying UNIX

UNIX’s terminal interface and program development environment became the gold standard for university Computer Science education. The following are some of the reasons it became so and why it continues to be critical academically, scientifically and industrially.

The most basic interface it presents, the Shell, is a programmer oriented model for interacting with the computer. Furthermore, Unix comes with a large collection of composable and extensible tools for processing ASCII documents that naturally integrate with and extend the power of the Shell. These tools make it easy to write new programs, including programs that translate ASCII documents, such as source code in one language, into source code of another language.

While it takes some effort to learn its strange command line interface, doing so teaches you to think like a programmer. You are encouraged to write little re-usable programs that you incrementally evolve as needed and combine with others to get big tasks done, generally trying to keep any particular program from getting too complicated.
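As a rough sketch of what combining little programs looks like, the following Python code connects two standard Unix commands, `sort` and `uniq`, so that the output of the first becomes the input of the second. In the Shell the same thing would be written as `sort | uniq`. The word list is made up for illustration, and the sketch assumes that both commands are installed and on the search path, as they are on typical Unix systems.

```python
import subprocess

# Some made-up input with repeated lines.
words = "pear\napple\npear\nfig\napple\n"

# Start `sort`, then start `uniq` reading from the output of `sort`.
sort_proc = subprocess.Popen(
    ["sort"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
)
uniq_proc = subprocess.Popen(
    ["uniq"], stdin=sort_proc.stdout, stdout=subprocess.PIPE, text=True
)
# Close our copy of the connection so only `uniq` is reading from `sort`.
sort_proc.stdout.close()

# Feed the input to `sort` and collect what comes out of `uniq`.
sort_proc.stdin.write(words)
sort_proc.stdin.close()
result = uniq_proc.communicate()[0]
sort_proc.wait()

print(result, end="")
```

Neither `sort` nor `uniq` knows anything about the other. Each does one small job, and the kernel provides the connection between them.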

UNIX is also not prescriptive about how you should do things. Rather, it provides a large collection of simple building blocks that you can learn to use creatively to meet your needs. The investment in learning its building blocks and the models for composing them minimizes the time and effort needed to go from an idea to a prototype. UNIX’s programming oriented nature leads to an environment in which almost anything about the OS and user experience can be customized and programmed. UNIX makes automation the name of the game: nearly everything you can do manually can be turned into a program that automates the task.

UNIX has instilled in generations of computer scientists a basic aesthetic for how to design and structure complicated collections of software. In particular, one learns that the designers of UNIX tried to structure the system around a small core set of ideas, “abstractions”, that once understood allow a programmer to understand the rest of the system and how to get things done. In UNIX this set includes Files, Processes, I/O redirection, Users and Groups, all of which we will cover later in this book.

UNIX’s programming friendly nature has led to the development of a very large and rich body of existing software for UNIX, with contributions coming from researchers, industry, students and hobbyists alike. This body of software has come to be a large scale shared human repository that we rely on heavily. The computer servers that form the core of the Internet and the Cloud largely run a UNIX variant called Linux. Many of the computers embedded in the devices that surround us, from wifi routers to medical devices and automobiles, also run a version of Linux. But perhaps most critically UNIX, in the form of Linux, is a cornerstone of the Open Source software ecosystem.

1.4. Bottom Line

UNIX comes with the kernel and a large collection of existing programs that permit a programmer to write more programs that they and others can use. In this book we will focus on what a program really is, how programs are constructed and, along the way, the core tools, skills and ideas that underlie all of computing. Given UNIX’s programmer focus and transparency it is a good choice for us. Furthermore, learning how to be productive in the UNIX environment will teach us a lot about being productive programmers, period.

What is LINUX?: Linux, or more formally GNU/Linux, is an open source variant of the UNIX operating system that is in heavy use today. Every imaginable type of computer runs Linux. In some sense it has become the computing equivalent of both “The Force” and “Duct Tape”. It is worth noting that Linux began its life because a university student got interested in Operating Systems and was frustrated with the “Closed” state of the art at the time.